Evaluation Steps

The alternatives for each subfield are determined by executing the defined evaluation steps. The evaluation steps are processed in the order in which they are defined. If an evaluation step returns at least one alternative for a subfield, the subsequent evaluation steps are skipped. The results found are treated as alternatives for the subfield and sorted by confidence value.
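The processing order described above can be sketched as a short loop. This is a minimal illustration, not the product's implementation: it assumes that each evaluation step can be modeled as a hypothetical callable returning a list of alternatives, and the `Alternative` type is introduced only for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Alternative:
    text: str
    confidence: float


# Hypothetical model: an evaluation step is a callable that returns the alternatives it found.
EvaluationStepFn = Callable[[], List[Alternative]]


def evaluate_subfield(steps: Sequence[EvaluationStepFn]) -> List[Alternative]:
    for step in steps:  # steps run in the order in which they are defined
        found = step()
        if found:
            # At least one alternative: skip the remaining steps and sort by confidence.
            return sorted(found, key=lambda alt: alt.confidence, reverse=True)
    return []  # no step returned an alternative


steps = [
    lambda: [],  # the first step finds nothing, so evaluation continues
    lambda: [Alternative("4711", 0.6), Alternative("4712", 0.9)],
    lambda: [Alternative("never reached", 1.0)],
]
print([alt.text for alt in evaluate_subfield(steps)])  # ['4712', '4711']
```

The third step is never called because the second step already returned alternatives.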

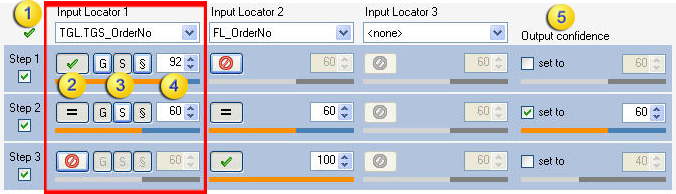

Define as many evaluation steps for a subfield as needed. When you select a subfield tab from the list on the left, an empty evaluation step is displayed. After you select an input locator from the Input Locator list, you can configure the corresponding settings for the evaluation step.

Each evaluation step provides the following settings:

- Use check box: Determines whether the evaluation step is performed.
- Evaluation method: Defines how an input locator is used in the evaluation step.
- Extraction type: Defines whether the alternatives of an input locator are rule-based or returned from the specific or generic algorithm.
- Input threshold: Limits the possible alternatives for an input locator to those whose confidence value is greater than or equal to the threshold.
- Output confidence: Sets the confidence value for an evaluation step. Use it to make sure that the results returned to the extraction field get a specific confidence value.

Select or clear the Use check box to the left of an evaluation step to enable or disable it. Add or delete evaluation steps as needed.
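The per-step settings can be pictured as a small record that is applied to the alternatives of an input locator. This is a minimal sketch under stated assumptions: the `EvaluationStep` and `Alternative` types and the `apply_step` function are hypothetical, and it assumes that a set output confidence replaces the confidence of every returned result.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Alternative:
    text: str
    confidence: float


@dataclass
class EvaluationStep:
    use: bool = True                           # the Use check box
    input_threshold: float = 0.0               # minimum confidence of input alternatives
    output_confidence: Optional[float] = None  # sketch assumption: None keeps the input confidence


def apply_step(step: EvaluationStep, alternatives: List[Alternative]) -> List[Alternative]:
    """Apply one step's settings to the alternatives of its input locator."""
    if not step.use:
        return []  # a cleared Use check box means the step is not performed
    kept = [a for a in alternatives if a.confidence >= step.input_threshold]
    if step.output_confidence is not None:
        # Give every returned result the configured output confidence.
        kept = [Alternative(a.text, step.output_confidence) for a in kept]
    return kept


step = EvaluationStep(input_threshold=0.5, output_confidence=0.8)
print(apply_step(step, [Alternative("A", 0.4), Alternative("B", 0.7)]))
# [Alternative(text='B', confidence=0.8)]
```

Returning an empty list for a disabled step means that, in an ordered evaluation, processing simply continues with the next step.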

Locator alternatives for a standard locator, such as the Format Locator, are determined by rule-based algorithms. Trainable locators, such as the Amount or Order Group Locator, also provide results from a generic and a specific algorithm.

For an input locator that has multiple extraction algorithms, additional buttons can be used to select the specific, generic, and rule-based algorithms.
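Selecting an extraction type amounts to choosing which algorithm's results an evaluation step reads. The sketch below assumes a hypothetical data shape in which a locator's results are keyed by the algorithm that produced them; `ExtractionType`, `alternatives_for`, and the sample values are illustrative only.

```python
from enum import Enum
from typing import Dict, List, Tuple


class ExtractionType(Enum):
    RULE_BASED = "rule-based"
    SPECIFIC = "specific"
    GENERIC = "generic"


Alt = Tuple[str, float]  # (text, confidence)

# Hypothetical shape: the results of one locator, keyed by the algorithm that produced them.
LocatorResults = Dict[ExtractionType, List[Alt]]


def alternatives_for(results: LocatorResults, extraction_type: ExtractionType) -> List[Alt]:
    """Return the alternatives of the selected extraction algorithm, or [] if the
    locator does not provide that algorithm."""
    return results.get(extraction_type, [])


# Illustrative standard locator: only rule-based results.
format_results: LocatorResults = {ExtractionType.RULE_BASED: [("2024-01-31", 0.9)]}
# Illustrative trainable locator: results from the specific and generic algorithms.
amount_results: LocatorResults = {
    ExtractionType.SPECIFIC: [("42.50", 0.8)],
    ExtractionType.GENERIC: [("42.50", 0.6), ("7.00", 0.3)],
}
print(alternatives_for(amount_results, ExtractionType.GENERIC))  # [('42.50', 0.6), ('7.00', 0.3)]
print(alternatives_for(format_results, ExtractionType.SPECIFIC))  # []
```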

To define the evaluation method for an evaluation step, click the Evaluation Method button for the corresponding input locator. By default, the button for the input locator is not selected. This means that the input locator alternatives are not taken into account for this evaluation step.

To define an evaluation step that returns all the alternatives of one input locator whose confidence value is greater than or equal to the input threshold, click the button for this input locator.

To define an evaluation step that compares the results of multiple locators whose confidence values are greater than or equal to the given input threshold and that returns all alternatives that are equal, click the button for each input locator.

To clear the selection for an input locator, click the Evaluation Method button again.

If several input locators are selected for an evaluation step, the alternatives of all selected input locators are compared. Only those alternatives that are found by every input locator are taken into account. An alternative that is found at the same location by all the input locators is given the highest confidence value.
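The three evaluation methods and the comparison of several locators can be sketched as follows. This is a hedged illustration, not the product's logic: `EvaluationMethod`, `run_step`, and the locator names are hypothetical, the sketch assumes a single-locator step is not combined with other locators, and it uses the highest input confidence for compared alternatives while ignoring position agreement.

```python
from enum import Enum
from typing import Dict, List, Tuple


class EvaluationMethod(Enum):
    NOT_USED = "not used"  # default: the locator's alternatives are ignored
    SINGLE = "single"      # return all alternatives at or above the input threshold
    COMPARE = "compare"    # return only alternatives found by every compared locator


Alt = Tuple[str, float]  # (text, confidence)


def run_step(methods: Dict[str, EvaluationMethod],
             results: Dict[str, List[Alt]],
             threshold: float) -> List[Alt]:
    used = {name: m for name, m in methods.items() if m is not EvaluationMethod.NOT_USED}
    # Apply the input threshold to every locator that takes part in the step.
    above = {name: [a for a in results.get(name, []) if a[1] >= threshold] for name in used}
    single = [name for name, m in used.items() if m is EvaluationMethod.SINGLE]
    compare = [name for name, m in used.items() if m is EvaluationMethod.COMPARE]
    if single:
        # Sketch assumption: a SINGLE locator is not combined with other locators.
        if len(single) > 1 or compare:
            raise ValueError("SINGLE must be the only selected locator in this sketch")
        return above[single[0]]
    if not compare:
        return []  # no locator selected: the step returns nothing
    # Keep only texts that every compared locator returned.
    common = set.intersection(*({text for text, _ in above[name]} for name in compare))
    # Simplification: use the highest input confidence, ignoring position agreement.
    best: Dict[str, float] = {}
    for name in compare:
        for text, conf in above[name]:
            if text in common:
                best[text] = max(conf, best.get(text, 0.0))
    return sorted(best.items(), key=lambda item: item[1], reverse=True)


results = {
    "LocatorA": [("42.50", 0.9), ("7.00", 0.4)],
    "LocatorB": [("42.50", 0.7), ("100.00", 0.8)],
    "LocatorC": [("7.00", 1.0)],
}
methods = {"LocatorA": EvaluationMethod.COMPARE,
           "LocatorB": EvaluationMethod.COMPARE,
           "LocatorC": EvaluationMethod.NOT_USED}
print(run_step(methods, results, threshold=0.5))  # [('42.50', 0.9)]
```

Here "7.00" from LocatorA falls below the threshold, "100.00" is found only by LocatorB, and LocatorC is not used, so only "42.50" remains.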

The highest input confidence value of the compared alternatives at the same position is used as the output confidence. If the text is not found at the same position by all selected input locators, the output confidence is reduced. For example, if you selected three input locators and the alternative text is found at the same position by only two of them, the output confidence value is 2/3 of the maximum original value. If you selected two input locators and the alternative text is found at the same position by only one of them, the output confidence value is reduced by half. This ensures that alternatives found at the same place by most of the input locators get the highest confidence value. To adapt the output confidence, set the Output confidence value as needed.

Alternatives without positioning information are used only if there are no alternatives with positioning data.
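The confidence reduction in the examples above can be read as: highest input confidence in the largest group of locators that agree on the position, multiplied by (agreeing locators / selected locators). The sketch below implements that reading under assumptions: the position tuple, `LocatedAlternative`, `prefer_positioned`, and `compared_confidence` are hypothetical, the maximum is taken within the agreeing group, and ties between equally large groups go to the higher confidence.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Position = Tuple[int, int, int]  # hypothetical: (page, left, top) of the found text


@dataclass
class LocatedAlternative:
    locator: str
    text: str
    confidence: float
    position: Optional[Position]  # None when the locator has no positioning data


def prefer_positioned(alts: List[LocatedAlternative]) -> List[LocatedAlternative]:
    """Use alternatives without positioning data only if none have positions."""
    positioned = [a for a in alts if a.position is not None]
    return positioned if positioned else alts


def compared_confidence(alts: List[LocatedAlternative], selected_locators: int) -> float:
    """Output confidence for one text that the compared locators found.

    Expects a non-empty list with at most one alternative per locator."""
    groups: Dict[Optional[Position], List[float]] = defaultdict(list)
    for alt in alts:
        groups[alt.position].append(alt.confidence)
    # Largest group of locators agreeing on the position; ties go to the higher confidence.
    agreeing = max(groups.values(), key=lambda confs: (len(confs), max(confs)))
    # Highest input confidence in that group, scaled by the share of agreeing locators.
    return max(agreeing) * len(agreeing) / selected_locators


p1, p2 = (1, 100, 200), (1, 300, 50)
three = [
    LocatedAlternative("LocatorA", "42.50", 0.9, p1),
    LocatedAlternative("LocatorB", "42.50", 0.6, p1),
    LocatedAlternative("LocatorC", "42.50", 0.8, p2),
]
print(compared_confidence(three, selected_locators=3))  # 2/3 of 0.9, approximately 0.6
two = [
    LocatedAlternative("LocatorA", "42.50", 0.75, p1),
    LocatedAlternative("LocatorB", "42.50", 0.5, p2),
]
print(compared_confidence(two, selected_locators=2))  # 0.375 (half of 0.75)
```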