Transact reporting and workflow

Reporting consists of the following major functions:

-

Data Migration

-

Data Calculation

-

Data Cleanup

Transact Reporting also supports multiple languages as part of the User Interface. For more information, see Ephesoft Transact Technical Specifications.

Reporting process: Cron jobs

A single master cron job controls the entire reporting process from data migration to report calculations, to data cleanup. The following workflow steps are performed based on the cron job execution:

-

Activity tables are optimized and unnecessary data is removed.

-

Data used for the reports are migrated from the Application database to the Reports database and persisted to staging tables.

-

Aggregation and calculations are performed from the staging tables and the results persist in calculation tables that are used when rendering the reports.

-

The final data cleanup occurs on the Transact application tables and the staging tables used in the Reports database.

This entire sequence is executed by default every 15 minutes. This is all controlled by a cron job in the reporting property file.

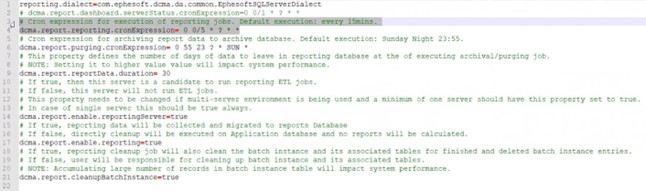

See the following image for an example of these cron jobs in the dcma-reporting.properties file and the default settings.

-

dcma.report.dashboard.serverStatus.cronExpression

# Cron expression for execution frequency of reporting jobs

By default, this is set to 15 minutes.

-

dcma.report.reporting.cronExpression

# Cron expression for archiving report data to the archive database

-

This operation should be configured to execute during off-hours (weekends, late night).

-

By default, this is set to 11:35 pm, Sunday night.

# Cron expression for archiving report data to an archive database.

-

This property defines the number of days of data to leave in the reporting database when archiving or purging occurs.

-

Changing the setting to execute more frequently may impact system performance.

-

-

dcma.report.reportData.duration

# Cron expression that defines the number of days of data to leave in the Reporting database, at the time of executing the archival or purging job.

-

By default, this is set to 30 days. Any setting reflects the number of days reporting data is retained in the Reports database before the data is migrated to the Report Archive database.

-

Setting this to a higher value may impact system performance based on how much volume in terms of throughput is being processed. Customers processing over 3000 batches per day should use the default.

-

-

dcma.report.enable.reportingServer

# Cron expression for enabling or disabling this server to run ETL jobs

-

If true, this server is enabled to run reporting ETL jobs.

-

If false, ETL jobs are disabled for this server.

-

In a multi-server environment, one of the servers should have this setting as true.

-

In a single-server environment, this setting should always be true.

-

Customers may wish to disable ETL reporting for all servers. Set all servers to false.

-

-

dcma.report.enable.reporting

# Cron expression for cleanup and reports calculation, and specifies whether reporting is executed on this server, or not

-

This setting is useful for configuring reports in a multi-server environment. This setting can prevent report calculations from being performed on all servers. Instead, calculations can be performed on just one server.

-

If true, reporting data on this server will be collected and migrated to the Reports database.

-

If false, data cleanup is executed in the Applications database and no reports are calculated.

-

-

dcma.report.cleanupBatchInstance

# Cron expression enables or disables batch instance cleanup

-

If true, the reporting cleanup cron job also cleans the batch instance and associated tables for the finished and deleted batch instance entries.

-

If false, the user is responsible to clean up batch instance data and associated tables.

-

Data migration in Transact

Data migration occurs from the following Transact tables to the Reports database:

-

batch_instance

-

act_hi_procinst

-

batch_class

-

batch_class_module

-

rp_throughput_module_data

This new table persists the number of documents and pages at the end of every workflow module in the batch workflow.

-

rp_data_corrections

This new table improves the correction reports as a part of Advanced Reporting. This new table stores JSON objects for more efficient mapping to the jobs that perform the calculations.

The default frequency of this migration cron job is every 15 minutes. After Transact migrates the tables successfully, Transact then runs the Advanced and Dashboard report jobs.

The batch.xml data that is required for reporting now persists to the Application database. The data migration job can leverage the necessary data strictly from the Application database. This makes data migration more efficient and simpler.

Data calculation in Transact

Transact performs two separate calculation jobs:

-

Dashboard

-

Advanced Reports

Data cleanup in Transact

Activiti has the following four history tables:

-

ACT_HI_PROCINST

This table is used in reporting. -

ACT_HI_ACTINST

-

ACT_HI_VARINST

-

ACT_GE_BYETARRAY

Transact includes data cleanup with the following factors in design:

-

The cleanup process is broken down into two separate jobs after the Advanced and Dashboard jobs are complete:

-

Cleanup tables in the Application database

-

Cleanup staging tables in the Reports database

-

These cleanup jobs run every 15 minutes. Once the batch instance is finished, the application deletes the history records.

-

Transact stores only relevant fields from the batch.xml file that are required for correction reports in these tables. These fields are stored as JSON objects.

-

The ApplicationcCleanup job is responsible for cleaning up Ephesoft Transact tables with batch instance tables.

The ReportCleanup job cleans the staging tables that are used during migration.

-

The jobs are executed in sequential order:

-

Cleanup Procedure: The database contains a procedure that cleans the tables in the database.

-

Activiti Workflow Engine: When executing a batch instance, Transact uses an Activiti workflow engine. Activiti creates history tables. Three history tables are not needed in Transact, so the reporting method removes them.

-

Database procedure for cleanup

Reporting cleans the Activiti history table with dbo-CAL_ACT_HI-CLEANUP procedure.

The Activiti history table consists of the following three tables:

-

dbo.ACT_HI-ACTINST

-

dbo.ACT_GE_BYTEARRAY

-

dbo.ACT_HI_VARINST

The dbo-CAL_ACT_HI_CLEANUP procedure also updates the dbo.batch_instance. Reporting uses the dbo.batch_instance column to monitor status and cleans up the batch instance in the finished status.

-

These staging tables are persisted in the Reports database and used as part of data calculation that is used for the throughput (base) and corrections reports as part of advanced reporting.

-

dbo.rp_data_corrections

Contains the batch.xml file data relative to corrections during each step of the Transact workflow

-

dbo.rp_throughput_module_data

Tracks documents and pages in each batch instance for each module

-

Recommendations for running Transact reports

This topic provides recommendations for running reports in Transact:

-

It is advisable to run the Reporting Service on a UI Server.

Running the Reporting Service on an executing server may cause delays in the Report ETL Job execution due to high CPU utilization by batches.

-

Dashboard and Standard report scripts and cron jobs have been merged into a single entity. This means both Dashboard and Throughput Reports will be updated at the same time in the UI. The Cron for this common ETL Script (Dashboard) should be frequent - every 15 to 20 minutes (the default setting is every 15 minutes).

-

The clean-up algorithm will delete data for FINISHED batches for which all reports have been successfully run (as per the license).

Along with this, XML files from SharedFolders/report-data and Activiti tables for the same batch instances will also be cleared.

-

We recommend that all cron jobs should be configured so that minimal collisions occur.

For example, the cleanup cron could be set at the 45th/50th minute of every "n" hours to ensure that the chances of another cron being fired at the same instance are less.

-

ETL scripts are memory intensive due to the frequent sorting and aggregation of data. Hence, as per the load of batches, the JVM heap memory should be configured accordingly.

Low-load customers

The following specific recommendations apply for low-load customers (below 1000 pages/day):

-

The Dashboard Cron job can be set to a more frequent interval (every five minutes).

-

JVM Heap memory does not need to be adjusted or notably increased for the reporting to function properly.

Cron timings should be exclusive of each other by ensuring they do not get fired at the same time. This can be achieved by specifying the exact minute for the cron to be triggered.

For example, 0 23 0/5 * * * denotes a cron that will be executed 23 minutes after every five hours.

Medium and high-load customers

The following specific recommendations apply for medium-load customers (1000-10,000 pages/day) and high-load customers (above 10,000 pages/day):

-

Multi-Server Setup is recommended with the Reporting Service running on a UI server.

-

The Dashboard Cron (which will update data for Dashboard and Throughput Reports) should be set to a slightly longer interval - every 15 minutes to allow scripts more time to complete.

-

JVM Heap memory should be increased in cases where extremely high numbers of batches are being processed regularly.

Cron timings should be exclusive of each other by making sure they do not get fired at the same time. This can be achieved by specifying the exact minute for the cron to be triggered.

For example, 0 23 0/5 * * * denotes a cron that will be executed in 23 minutes after every five hours.

Configuring reports

Transact allows administrators to adjust system-level configurations for reports, as follows:

-

Properties, .lgx and script files

-

Report database creation - report_create.sql

-

Data management folders

Properties, .lgx and script files

Administrators may want to adjust the Transact properties files, .lgx file, or ETL scripts for certain report functions and parameters. Possible configurations include the following:

-

etl-variables.properties

-

dcma-reporting.properties

-

application.properties

-

dcma-backup-service.properties

-

_Settings.lgx

-

ETL scripts

The following property file needs to be configured for connecting to the report database:

{Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/etl-variables.properties

By default, the property is configured to point to the report database created by Transact. If the administrator wishes to use a different database, this property file needs to be configured accordingly.

All properties are of type string.

|

Configurable property |

Value option |

Description |

|---|---|---|

|

reporting.driverClassName |

For MySQL: com.mysql.jdbc.Driver For MSSQL : jdbc:jtds:sqlserver://localhost; databaseName=report; user=<databaseUsername>; password=<databasePassword> |

Driver class for database connection. Example: for MySQL, it should be set to com.mysql.jdbc.Driver |

|

reporting.dialect |

NA |

Dialects used by hibernate to use with database. Example: org.hibernate.dialect.MySQL5InnoDBDialect |

|

reportdata.path |

NA |

Path to the folder containing files for all executed batches. Example: D:\\Ephesoft\\SharedFolders/report-data |

|

Ephesoft.loginUserName |

NA |

This property is used to decrypt report data for encrypted Batches. Please enter SuperUser Name. If encryption of property file values is enabled, this value should also be encrypted. |

|

Ephesoft.loginPassword |

NA |

This property is used to decrypt report data for encrypted Batches. Please enter SuperUser Password. If encryption of property file values is enabled, this value should also be encrypted. |

Property file: {Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/dcma-reporting.properties

|

Configurable property |

Type of value |

Value option |

Description |

|---|---|---|---|

|

dcma.report.dashboard.cronExpression |

Cronjob Expression |

NA |

Frequency with which Report Dashboard data and Throughput Report data is refreshed. Recommended value: "0 0/15 * ? * *" (Every 15 minutes) |

|

dcma.report.dashboard.server Status.cronExpression |

Cronjob Expression |

NA |

Frequency with which Server Status data is refreshed. Recommended value: "0 0/1 * ? * *" (Every minute) |

|

dcma.report.advanced.cronExpression |

Cronjob Expression |

NA |

Frequency with which Report Dashboard data is refreshed. Recommended value: "0 0 0/12 ? * *" (Every 12 hours) |

|

dcma.report.cleanup.cronExpression |

Cronjob Expression |

NA |

This cron expression governs the time period with which Batches will be cleaned from Ephesoft Transact. Any batch instance for which all three Reports have been generated, will be cleaned up when the cron triggers. (Report Data Folder, Database, Activiti Tables) |

|

application.last.version |

DO NOT alter this property. This property is managed by Ephesoft Transact to govern the upgrade status of Reports database. As an indicator, the presence of a dollar sign ($) before the application version confirms that upgrade process completed successfully |

Property file: {Ephesoft-Home}/WEB-INF/classes/META-INF/application.properties

|

Configurable property |

Type of value |

Value option |

Description |

|---|---|---|---|

|

enable.reporting |

String |

True or False |

Whether backup report-data files are created. |

Property file: {Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-util/dcma-backup-service.properties

|

Configurable Property |

Type of Value |

Value Option |

Description |

|---|---|---|---|

|

backup.report_folder |

String |

NA |

Path to the folder containing back up files for all executed plugins for batches. Example: C:\\Ephesoft\\SharedFolders/report-data |

Lgx file: {Ephesoft-Home}/EphesoftReports/_Definitions/_Settings.lgx

This file contains connection details to connect with the existing report database and the error location path. The report database can either be in MariaDB or SQLServer. Follow these steps to configure this file:

-

Define the following parameters to establish the connection:

-

Connection Id

-

Type (SQL Server or MariaDB)

-

Port Number

-

Username

-

Password

-

Database name

-

Server name

-

-

Specify the error location path as follows: {Ephesoft-Home}/EphesoftReports/logs/dcma-report.log

ETL Scripts: ETL Scripts (running in the background to process data) are stored in the following locations:

-

{Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/Dashboard

-

{Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/Standard

-

{Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/Advanced

Scripts must be switched if the administrator needs to change the Reporting Database from MS SQL to MariaDB, or vice versa.

The ETL scripts for each respective database are at the following locations on the Transact server:

-

MS SQL

{Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/MSSQL

-

MariaDB

{Ephesoft-Home}/WEB-INF/classes/META-INF/dcma-reporting/MariaDB

-

Encrypted Batch Class configuration

The configurations are stored in the following file:

{Ephesoft Installation Directory}\Application\WEB-INF\classes\META-INF\dcma-reporting\etl-variables.properties

The following two properties are added for Encrypted Batch support:

-

ephesoft.loginusername=<Username for which Ephesoft Web Services are authorized>

-

ephesoft.loginpassword=<Password for the above Username>

The user can support encryption for the above properties by using the following settings:

-

A combination of encrypted strings from Ephesoft Encryptor (<Ephesoft Installation Directory>\Application\native\encryption\Encryptor.bat)

-

Encryption switch in <Ephesoft Installation Directory>\Application\WEB-INF\classes\META-INF \dcma-encryption\dcma-encryption.properties.

Export options for reports

The following export options are available at the bottom of the widgets for all reports:

-

Export PDF: User can export the report in PDF format.

-

Export Excel: User can export the report in excel format.

-

Print Report: User can open the report to a new tab/window and then print the report.

Failover mechanism for reports

Reports are supported for a multi-server Transact environment. Reporting is accessible on all servers.

The user interface of Reports is present and hosted on all servers. Therefore, you can assume that if Transact is running on a machine, Reports are accessible via localhost Ephesoft Transact Application.

The Back-End (ETL Scripts) is governed by failover mechanism. ETL Scripts are stored at a shared location and only one server can run these scripts at a time. If that server shuts down for any reason, Ephesoft Transact Heartbeat triggers the failover mechanism and the ETL Scripts service are assigned to one of the remaining servers.

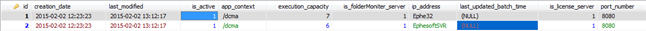

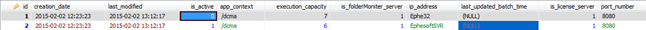

The following server_registry table displays the ETL Service running on Ephe32 system.

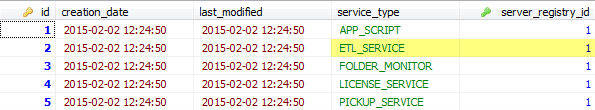

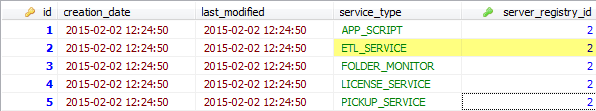

The following service_status table displays the server_registry_id.

In case of server failure, when the failover mechanism takes over, ETL Service is assigned to EphesoftSVR as displayed in the following server_registry table.

The following service_status table displays updated server_registry_id.

Last sync time

All Transact reports display the last sync time with the Transact server and services in the following format:

YYYY/MM/DD HH:MM:SS

Purging mechanism

A purging mechanism archives data periodically in Transact for reporting. The frequency of this archive process can be controlled by the user.

The user can control the amount of data to be archived based on how old it is.

Configurations

The user can control the purging mechanism using the following parameters:

-

Location: <Ephesoft Transact_Home>\Application\WEB-INF\classes\META-INF\dcma-reporting

-

File name: dcma-reporting.properties

-

Property name: dcma.report.purging.cronExpression (Default Value=Every Third month on the 1st Day at 12am)

This property can be configured to schedule the Purging Job using regular cron expressions.

-

File name: etl-variables.properties

-

Property Name: dcma.report.reportData.duration (Default Value = 30 days)

As an example, if the value of this property is set to 90, this means that at every scheduled purge cycle, data older than 90 days is archived. Recent data (<90 days) is retained.

-

If a user wants to archive the complete data every time, they can set the value of the property to 0.

The reporting.purge_duration property denotes the time period (in days) prior to which all data is purged.

As an example, if the purge_duration = 5 days, batches with creation_date older than Current date are purged.

-

Taking current date as 06-06-2016 5:00pm.

-

Batch Instance with creation date = 30-04-2016 1:00pm is Purged

-

Batch Instance with creation date = 31-04-2016 1:00pm is Purged

-

Batch Instance with creation date = 31-04-2016 8:00pm is NOT Purged (number of days = 5, taking difference of 06-06-2016 5:00pm and 31-04-2016 8:00pm)

-

Batch Instance with creation date = 01-06-2016 1:00pm is NOT Purged (number of days = 5, not greater than 5)

-

Batch Instance with creation date = 01-06-2016 5:30pm is NOT Purged

-

Batch Instance with creation date = 03-06-2016 1:00pm is NOT Purged

-

After purging, the Dashboard data is recalculated for remaining data.

Steps to use the purging mechanism

Perform these steps to use the Purging mechanism:

- Configure dcma.report.reportData.duration according to this requirement.

-

Configure dcma.report.purging.cronExpression according to this requirement.

As a result of these configurations, the following processes are enabled:

-

After every purge cycle, data will be moved to archive database.

-

All remaining reports will be recalculated on the basis of the remaining data in the original Reports database.

Printing reports

The Print feature enables the current report to be copied to a new tab or window. The user can then use the print dialog on the new tab or window to print the current report.

A specified time delay is added before the report gets printed so that the charts are fully displayed on the new tab or window before being printed.

Reports database reporting views

The following views are leveraged to perform on the fly calculations as the source of the corresponding reports:

|

Aggregated Data View Name |

Description |

|---|---|

|

batch_count_per_priority_1_v |

Batch count per priority |

|

batch_count_per_status_1_v |

Batch count per status |

|

batch_per_priority_v |

Batch per priority |

|

batch_per_priority_status_v |

Batch per priority status |

|

review_validate_backlog_1_v |

Review Validate backlog view |

|

review_validate_backlog_dtls_v |

Review Validate backlog details |

The following are applicable for Transact 2020.1 or above:

|

Aggregated Data View Name |

Description |

|---|---|

|

batch_class_ppm_v |

Batch class pages per minute |

|

module_duration_v |

Module duration |

|

review_validate_module_dur_v |

Review Validate module duration |

|

review_validate_plugin_dur_v |

Review Validate plugin duration |

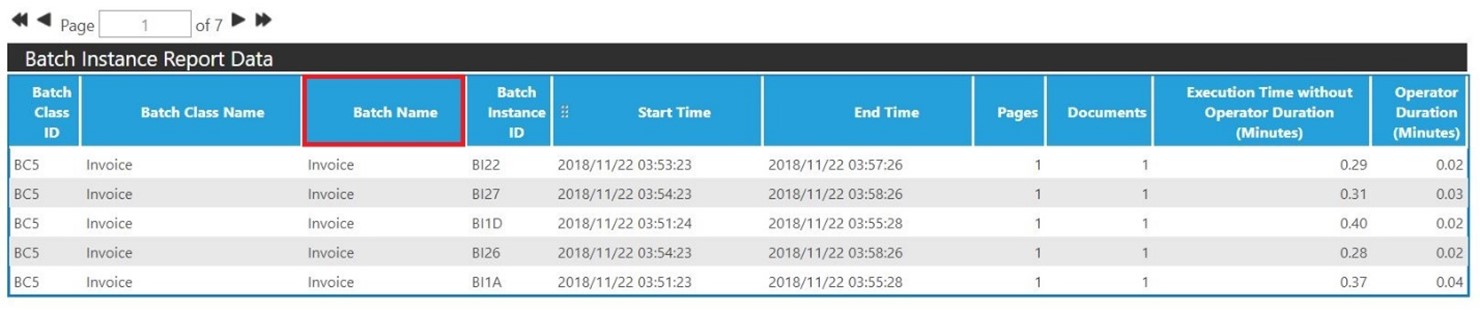

Batch Name column

The Batch Name column in the Batch Instance Report Data table contains names for the specific batch instance being run. The batch names in Batch Name and Batch Class Name may differ. An example is shown below.